Scaling tech teams demands more than just rapid hiring—it requires fast onboarding, continuous upskilling, and measurable skill-building. CYPHER delivers AI-powered course creation, adaptive content, ...

Training in a restaurant environment isn't just a check-the-box task; it's fundamental to delivering a consistent, compliant, and high-quality guest experience. A learning management system (LMS) prov...

DEI training for employees focuses on promoting diversity, equity, and inclusion in the workplace. By equipping staff with tools to recognize biases, understand systemic barriers, and engage in inclus...

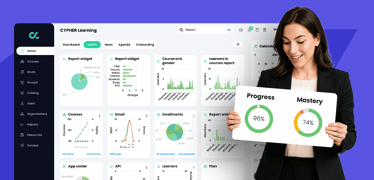

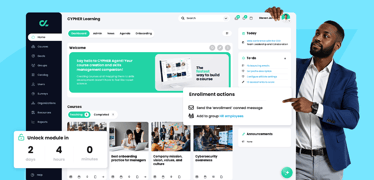

A good LMS should offer intuitive design, automation, granular tracking, and flexible reporting—with CYPHER Learning exemplifying this balance for forward-thinking organizations. Why smart tracking ma...

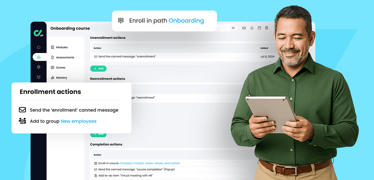

Finding the right learning management system (LMS) for onboarding new employees is about aligning platform features with your organization’s goals of efficiency, engagement, compliance, and scalabilit...

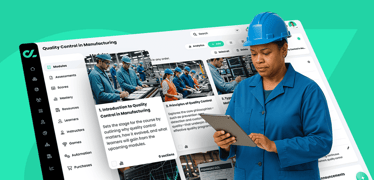

Choosing the right learning management system (LMS) is crucial for manufacturing companies aiming to enhance employee training, ensure compliance, and boost operational efficiency. Below are some top ...

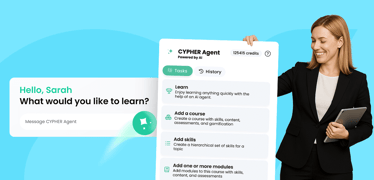

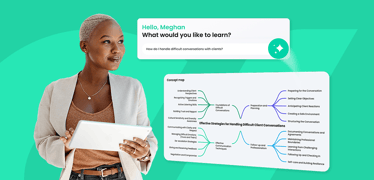

Artificial Intelligence (AI) is reshaping the learning and development (L&D) landscape by introducing AI-powered training assistants that streamline course creation, personalize learning experiences, ...

For learning and development (L&D) leaders in mid‑size businesses, the challenge isn’t just finding an LMS—it’s finding one that balances flexibility, automation, and user experience without overwhelm...

An LMS built for remote teams must deliver personalized and role-based skills training across diverse groups—employees, partners, and customers—while connecting learning to business objectives through...

In another era, workplace training meant long classroom sessions, bulky manuals, and one-size-fits-all instructional methods. But today’s enterprises can’t afford to train like it’s 1999. With a hybri...

When high-performing employees leave because they don’t see a path forward, it can be disappointing and costly. That’s why more organizations are turning to employee development plans to retain amazin...

An AI-powered LMS acts like a smart, proactive assistant for your training programs—streamlining content delivery, adapting to individual learner needs, and helping teams stay on track without constan...

Modern learners want more than static, one-size-fits-all training. They expect interactive, personalized experiences that match their needs and learning styles. This shift is powered by adva...

In today’s fast-moving corporate world, HR leaders at large companies are under increasing pressure to align workforce development with strategic business outcomes. From supporting hybrid work environ...

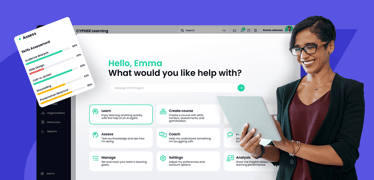

If you’re looking for an LMS that combines the power of AI with ease of use for creators and learners, look no further than CYPHER Learning. With its built-in AI agent—CYPHER Agent for creators and CY...